Ask·Me·Why

2025 · 2025 Competition

Category: Research

Project Overview

One Liner: Explain AI decisions by finding what makes each case unique within its neighborhood of similar instances, without ever peeking inside the model.

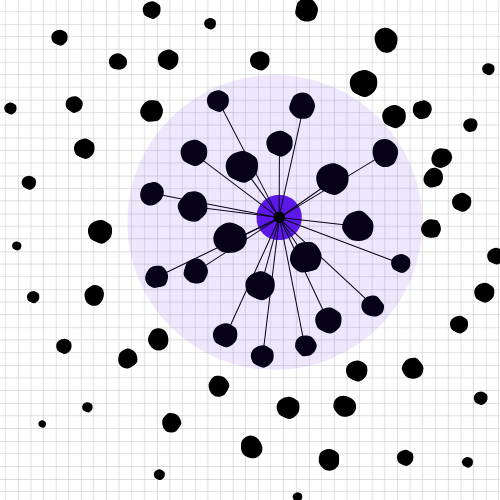

As machine learning models increasingly influence critical decisions in healthcare, finance, and law, the need for transparent explanations has become paramount. Popular explainability tools such as SHAP and LIME depend on the model itself: they either query it many times or, as with tree-specific SHAP on XGBoost models, read its internal structure. They can also produce inconsistent explanations across different models. We present Ask·Me·Why, a novel model-agnostic framework that explains individual predictions by analyzing how feature distributions in an instance's local neighborhood differ from global patterns.
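The core comparison can be illustrated with a small sketch. It assumes numeric tabular features and a neighborhood that has already been selected. It scores each feature by how far its neighborhood mean sits from the global mean, measured in global standard deviations. This standardized-mean-difference score and the function name `distinctive_features` are illustrative assumptions, not Ask·Me·Why's published scoring rule.

```python
import statistics


def distinctive_features(data, neighborhood_idx, feature_names):
    """Rank features by how much their neighborhood mean departs from the global mean.

    Hypothetical scoring rule: |local mean - global mean| / global std dev.
    `data` is a list of rows (lists of floats); `neighborhood_idx` indexes into it.
    """
    scores = {}
    for j, name in enumerate(feature_names):
        column = [row[j] for row in data]
        local = [data[i][j] for i in neighborhood_idx]
        global_sd = statistics.pstdev(column)
        if global_sd == 0:
            scores[name] = 0.0  # a constant feature cannot distinguish anything
            continue
        gap = abs(statistics.fmean(local) - statistics.fmean(column))
        scores[name] = gap / global_sd
    # Most distinctive features first
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Toy data: the neighborhood (rows 2 and 3) stands out more on "income" than on "age".
data = [[1.0, 10.0], [2.0, 10.0], [3.0, 50.0], [4.0, 50.0]]
ranking = distinctive_features(data, [2, 3], ["age", "income"])
print(ranking)
```

Here "income" ranks first with a score of 1.0 because the neighborhood mean (50) lies one global standard deviation (20) above the global mean (30), while "age" shifts by less than one standard deviation.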

Our approach uses embeddings to identify semantically similar instances and applies proximity-weighted analysis to find the most distinctive features for each prediction. By comparing how features behave in an instance's neighborhood versus across the entire dataset, Ask·Me·Why reveals why a specific prediction occurs without requiring access to model internals. Crucially, instances are weighted by proximity: the closer an instance is to the target, the more it influences the explanation, mirroring how domain experts prioritize the most similar cases.
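A minimal sketch of neighbor search with proximity weighting follows. It assumes every instance already has an embedding vector. It uses Euclidean distance to pick the k nearest neighbors and a Gaussian kernel so that closer neighbors receive larger weights. The kernel, the bandwidth `sigma`, the value of `k`, and the function names `weighted_neighbors` and `weighted_feature_shift` are hypothetical choices for illustration; the project does not specify them.

```python
import math


def weighted_neighbors(embeddings, target, k=3, sigma=1.0):
    """Return (index, weight) pairs for the k nearest neighbors of embeddings[target].

    Hypothetical choices: Euclidean distance and a Gaussian kernel
    w = exp(-d^2 / (2 * sigma^2)); weights are normalized to sum to 1.
    """
    query = embeddings[target]
    dists = [
        (i, math.dist(query, e))
        for i, e in enumerate(embeddings)
        if i != target  # exclude the instance being explained
    ]
    nearest = sorted(dists, key=lambda t: t[1])[:k]
    raw = [(i, math.exp(-d * d / (2 * sigma * sigma))) for i, d in nearest]
    total = sum(w for _, w in raw)
    return [(i, w / total) for i, w in raw]


def weighted_feature_shift(features, neighbors):
    """Per-feature gap between the proximity-weighted local mean and the global
    mean, scaled by the global (population) standard deviation. Signed."""
    shifts = []
    for j in range(len(features[0])):
        column = [row[j] for row in features]
        global_mean = sum(column) / len(column)
        global_sd = math.sqrt(sum((x - global_mean) ** 2 for x in column) / len(column))
        local_mean = sum(w * features[i][j] for i, w in neighbors)
        shifts.append(0.0 if global_sd == 0 else (local_mean - global_mean) / global_sd)
    return shifts


# Toy example: instance 0 sits in a tight cluster with instances 1 and 2.
embeddings = [[0.0, 0.0], [0.1, 0.0], [0.2, 0.0], [5.0, 5.0], [5.1, 5.0]]
features = [[1.0], [1.0], [1.0], [9.0], [9.0]]
nbrs = weighted_neighbors(embeddings, target=0, k=2)
shifts = weighted_feature_shift(features, nbrs)
print(nbrs, shifts)
```

Features with the largest absolute shift are candidates for the explanation. A Gaussian kernel is one common way to make influence decay smoothly with distance; an inverse-distance weight would serve the same purpose, and cosine distance may suit text embeddings better than Euclidean distance.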

Video available at this link.
